Mike's Notes

This is a great article on the differences between ML and rules.

Resources

- https://www.capitalone.com/tech/machine-learning/rules-vs-machine-learning/

- https://martinfowler.com/articles/microservices.html#CharacteristicsOfAMicroserviceArchitecture

References

- Reference

Repository

- Home > Ajabbi Research > Library >

- Home > Handbook >

Last Updated

18/05/2025

A Modern Dilemma: When to Use Rules vs. Machine Learning

Machine learning is taking the world by storm, and many companies that use rules engines for making business decisions are starting to leverage it. However, the two technologies are geared towards different problems. Rules engines are used to execute discrete logic that needs to have 100% precision. Machine learning on the other hand, is focused on taking a number of inputs and trying to predict an outcome. It’s important to understand the strengths of both technologies so you can identify the right solution for the problem. In some cases, it’s not one or the other, but how you can use both together to get maximum value.

Business Logic, Calculations and Workflows

Let’s start first with understanding business logic. I’ve worked with various types of logic in systems over the years and it’s important to understand the context.

What is business logic? At its simplest form, it’s logic that contains decisions that govern a business process. These decisions are business decisions. The logic tends to be variable with the market and may change often depending on that particular industry’s drivers. The logic focuses on the why and the when. Ultimately a condition has to be true before an action can be taken.

Business logic typically leverages business calculations. Unlike business logic, business calculations tend to stay the same. They focus on the what and the how. It’s important to decouple these two from a deployment aspect as they change at different rates. As a general rule, any reusable logic should be independently deployable. If reusable logic is tied to an application deployment, it can’t be individually reused and is coupled to other components. Ideally, we want to break apart the reusable pieces of an application into microservices so they are independently reusable and deployable. See Martin Fowler’s illustration under “Figure 1: Monoliths and Microservices” as an example.

How do we connect the different steps of business logic together? Workflows.

They are the structured flow or sequencing of work tasks in a business process. Workflows can either be human-based, system-based (e.g. orchestration) or a hybrid between the two. In a previous blog I discussed when to react vs orchestrate.

Approaches for Implementing Business Logic

Now that we understand how these pieces fit together, let’s discuss some approaches for building business logic. In general, there are three different approaches for implementing business logic: Application code, decision table, and a rules engine.

Application Code

| Condition 1 | Condition 2 | Condition 3 |

|---|---|---|

| HasSpeedingTicket | HadAccident | SetDiscount; |

| False | False | 20 |

| True | False | 10 |

| False | True | 5 |

| True | True | 0 |

Decision tables are a good fit for logic that changes often and has a large number of conditions that are easier to manage in a table than code.

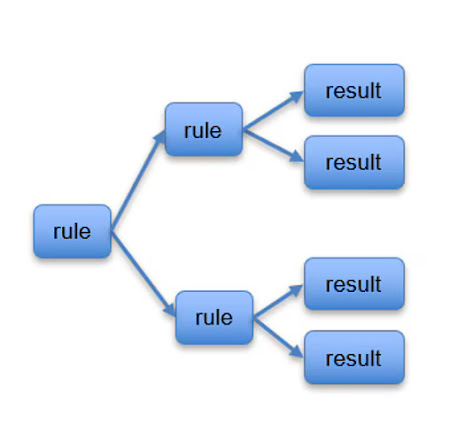

A rules engine is a good fit for logic that changes often and is highly complex, involving numerous levels of logic. Rules engines are typically part of a Business Rules Management System (BRMS) that provide extensive capabilities to manage the complexity.

If we put this guidance into quadrants, it would look something like the below:

As the rate of complexity and rate of change increases, application code is no longer suitable for business logic. Decision tables provide some relief as the rate of change increases, but ultimately a BRMS provides the best fit for high rate of change and high complexity.

Business Rules Management System (BRMS)

A Technical rule or Guided rule provides two different ways to author rules geared towards different end users. Technical rules are more for your developer audience, where guided rules consist of a point and click approach that may be better for less technical users.

The rule repository is one of the most powerful capabilities of a BRMS. It is the mechanism in which developers can find out what has already been built and what they may be able to reuse. Rule metadata is stored here that is critical in understanding the underlying intent.

Typically, rules can be deployed in one of two ways - either as part of a standalone service that is invoked via REST API calls, or embedded as part of the application (in-process). A little later in this article, we will see that machine learning platforms share a similar model.

Predictive Model Markup Language (PMML), or Portable Format for Analytics (PFA), are both industry standard formats for making models interchangeable. They enable you to build a model in one language or platform and port it into another language or platform that supports PMML or PFA.

One such example of a BRMS is Drools. Drools is an open source Apache licensed, Java-based rules engine. It supports a forward and backward chaining inference engine that leverages the PHREAK algorithm. This inference engine comes in handy if you want the rules engine to decide the order of your rules. Drools provides guided rules, technical rule DRL syntax, and support for Domain Specific Language (DSL). Drools also supports both in-process and standalone deployment models.

Machine Learning Platforms

Now that we have a good understanding of Rules Engines, let's compare them to Machine Learning Platforms. In a previous post, I provided an overview of what machine learning is and how you can use it with open source BPM. In a similar post, I explain how you can use machine learning with Akka. Let’s now take a look at a capability reference view for a machine learning platform.

Let’s touch on some of the key capabilities and again tie back to similar overlap with the BRMS view.

Data is the most important thing in machine learning. Your model is only as good as your data. You want as much data as possible and that may include both batch and real-time data sources.

Features are the inputs into models and some ML Platforms provide capabilities for you to create those features. Others provide capabilities that can automatically generate the features for you.

These different algorithms can be used in a machine learning model. An important thing to note here is they aren’t tied to Supervised, UnSupervised, or Reinforcement Learning categories, rather they can be used across all three.

You will notice some similarities to the BRMS capabilities in this space, specifically in-process and standalone REST API deployments along with support for PMML.

One of the most important aspects of managing a machine learning model is monitoring it for accuracy. A common fallacy with machine learning is that a ML model never needs to be retrained as it can learn itself. That is not the case as machine learning models have to be re-trained every so often as the data they are trained on starts to drift from the data they are executing against in production.

By comparing the capabilities of machine learning platforms with rules engines we can now see how there are similarities along with differences at the capability level. Given how products in these areas are continuing to become closer together, it’s understandable how the choice between the two can be difficult.

Guidance for When to Use Rules Engine vs. Machine Learning

So how do we make the decision of when to use a Rules Engine or Machine Learning? To answer this, let’s answer this question from the dimensions of logic, logic type, what creates the logic, and data. Rules are a good fit in the situation where:

- Logic: Exact logic is known. With rules you know ahead of time the logic you want to execute.

- Logic Type: Precision based. If then business logic is precise and does not involve any predictions. It results in boolean type outcomes based on evaluation of facts.

- Logic Creation: Done by a human. Software Engineers or business users create the rules that represent business logic.

- Data: Don’t need to automatically derive the logic from the data. Analysis typically occurs on data beforehand to determine what the exact logic should be.

Now, let’s look at machine learning using these same dimensions:

- Logic: Exact logic is not known. Rather the inputs/features that are significant in creating a prediction may be known.

- Logic Type: Prediction based using algorithms.

- Logic Creation: Created by machine learning software that runs using algorithms via training.

- Data: Is used to ultimately generate the model logic. Is the most important thing in machine learning. You want to use as much data as possible and also make sure the data is unbiased. If the data is biased, then the model will become biased.

In summary, leverage rules when you need precision and know the logic. Leverage machine learning when you want to predict something but don’t know exactly how.

Patterns for Using Machine Learning and Rules Engines Together

Imagine the use case where you are a realtor wanting to provide the best guidance to your clients on purchasing a home. Maybe there are several they are interested in, but aren’t sure how quickly they should act. Let’s walk through three different patterns for combining machine learning and rules together to achieve this.

In this pattern, two different machine learning models execute. One determines the probability of a house selling in 10 days. Another determines the probability of the sellers dropping the asking price. Both of these predictions are an input into rules. The rules then evaluate the output of the model and ultimately provide a recommendation to the realtor. Specifically, if the probability of the house selling in 10 days is greater than 50%, and the probability of the sellers dropping the price is less than 50%, then this pattern makes a specific recommendation for the realtor.

In this pattern, we start with the rules being the input into the machine learning models. Rules execute business logic to determine boolean based values. Does the house need repairs? Is it the selling offseason? Do the sellers want to get rid of the house and sell it now? The output of these rules then are features into the machine learning models. The machine learning models then provide a probability back to the realtor of the house selling in 10 days and the sellers dropping the price. Notice in this pattern there is not a recommendation back to the realtor, rather the probability is provided and final recommendation left up to the realtor.

Pattern 2: Leverage rule outputs as a feature input into machine learning models

In this pattern, it follows a mix of the previous two patterns. Both rules and machine learning outputs are inputs into a machine learning model. In this scenario the probability of the sellers dropping a price is an input into the probability of the house selling in 10 days. This pattern also leaves the ultimate recommendation up to the realtor.

Pattern 3: Leverage both rule and machine learning outputs as inputs

An Example Implementation

Now let’s apply these patterns to an actual proof of concept. I am going to build off of a previous reactive microservice machine learning proof of concept that I built in a previous post. We will enhance it to contain a rules service that the machine learning model takes as an input. It will use Pattern 1 above, Leverage machine learning outputs as an input into rules.

Let’s start with what we are changing in the proof of concept to support the integration of rules with machine learning. Below is a diagram that illustrates the architecture:

All components of the previous proof of concept hold true, (please see that previous blog for the details as I won’t repeat them here). The one new thing we introduced is the Java-based Rules MS. This is the rules microservice that will evaluate the output of the machine learning model probability. H20 outputs a confidence value as part of its predictions. For a transaction that the machine learning model determines is OK/Not Fraudulent, the rules service will check this confidence value. If the confidence value is less than 50%, then it will evaluate the output of some additional fraud checks, in this case name and address. If either of those failed, the rule will recommend that the transaction is Fraudulent.

Here is a sequence flow that walks through the steps:

Now let’s take a look at the Java Rules MS code to see how a drools rule would operate.

We can see this is using the Drools drl syntax, which is a way to write technical rules. There are two rules both checking if the transaction is OK and the machine learning output is less than 50%. The first rule also checks if a name check fails, where the second checks if an address check fails. You’ll notice in Drools there are not any else clauses. That is by design and rules fire based on conditions you specify. Within each rule you notice a RulesData function that is checking the status of several variables. In order for Drools rules to be evaluated against data, you have to create a POJO that represents the data model. This will include the getters and setters. See example below:

Let’s look at a snippet of the Java code that invokes the Drools rules, see below:

This code creates a KieSession and then inserts the data we want the rules to execute against into the KieSession. FireAllRules() tells Drools to do just that, fire all rules. Then Destroy() is used for cleanup. The Java based Rules MS takes the output of the drools rules and ultimately writes it to Kafka where it can be consumed.

Summary

Rules and machine learning each have their own strengths and are even more powerful when used together. Using the right solution for the problem is key. Leverage rules when you need precision and know the logic, leverage machine learning when you want to predict something but don’t know exactly how. Both can be used in a reactive microservices architectural style that provides a more maintainable, scalable, and faster to deliver architecture.

I hope you found this blog valuable and thank you for your time!

No comments:

Post a Comment